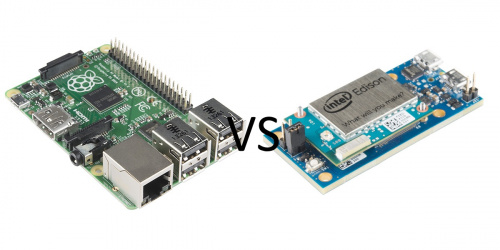

A lot of people have been (usually unfavorably) comparing the Edison to single board computers like the Raspberry Pi.

Let's do a little A:B comparison, shall we?

Raspberry Pi vs. Edison Feature Comparison

| Feature | Raspberry Pi B+ | Intel Edison |
|---|---|---|
| Price | $35.95 | $74.95* |
| Video | HDMI, Direct LCD, Composite | None |
| Audio | Stereo output | I2S Out |
| Flash | microSD socket | SDIO interface; 4GB onboard flash |
| RAM | 512MB | 1GB |
| Processor | 700MHz ARM1176JZF-S | 500MHz Dual-core Atom processor; 100MHz Quark MCU |
| GPIO | 27 pins on 0.1" headers | 70 pins on 0.4mm mezzanine header |
| Ethernet | 10/100 onboard | None |
| WiFi | None | Dual-band 802.11 (a/b/g/n) |
| Bluetooth | None | Bluetooth 2.1/4.0 |
| USB | 4 ports | 1 USB-OTG |
| Other interfaces | SPI, UART, I2C | SPI, UART, I2C, PWM |
| Power Consumption | 5V × 600mA (~3W) | 3.3V - 4.5V @ <1W |
| Dimensions | 85mm x 56mm x 19.5mm | 60mm x 29mm x 8mm* |

\* The Edison dimensions and price marked above reflect the Edison with the mini breakout board.

I've been watching lots of comment channels regarding the Edison, and I see a lot of people slamming the Edison as compared to the Raspberry Pi over a few of the lines above:

- lack of USB ("Where am I going to plug in my keyboard and mouse?")

- lack of video

- processor speed

- cost

- the I/O connector is impossible to use without an extra board

All five of those points would be valid criticisms if the Edison were a single board computer like the Raspberry Pi. The Raspberry Pi is, and always has been, aimed at providing a low-cost computing terminal that can be deployed as a teaching tool. Any hardware hackability on the platform has been purely incidental, a bonus feature.

The Edison, on the other hand, is meant to be a deeply embedded IoT computing module. There's no video because your WiFi-enabled robot doesn't need video. There's only one USB port because wearables don't need a keyboard and mouse. The processor speed is lower because power consumption matters in portable applications (and you can see above just how much better the Edison is than the Raspberry Pi on that front).

As for cost, yes, the Edison loses big time, at least until you add the cost of an SD card, a WiFi dongle, and a Bluetooth dongle to the Pi. That brings the prices much closer to parity, although they're still definitely not equal.

Finally, the last point: that connector makes a lot more sense when you stop thinking of the Edison as a competitor to the Raspberry Pi and start thinking of it as a competitor to, say, a bare ARM A9 or A11 SoC. The production requirements for any high-end SoC are pretty brutal: micro BGA packages are very unforgiving. Integrating the Edison into a product is much easier, and it has the benefit of giving you WiFi and Bluetooth (with an FCC ID!) to boot!

Three other things I want to highlight: the presence of the Quark microcontroller in the Edison, the operating voltage of the Edison, and the size of the Edison. The Raspberry Pi, without adding wireless communications devices, requires ~93 cubic centimeters of volume. The Edison and the mini breakout together require ~14. Footprint-wise, the Pi needs ~48 square centimeters to the Edison's ~17. That's a pretty serious difference.
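The volume and footprint figures follow directly from the dimensions in the comparison table. Here's a quick sketch that reproduces them, treating each board as a simple bounding box (an assumption: it ignores protruding connectors and any add-on wireless dongles).

```python
# Board dimensions from the comparison table, in millimetres.
# The Edison figure includes the mini breakout board.
PI_B_PLUS_MM = (85.0, 56.0, 19.5)
EDISON_MINI_MM = (60.0, 29.0, 8.0)


def volume_cm3(dims_mm):
    """Bounding-box volume in cubic centimetres (1 cm^3 = 1000 mm^3)."""
    length, width, height = dims_mm
    return length * width * height / 1000.0


def footprint_cm2(dims_mm):
    """Board footprint in square centimetres (1 cm^2 = 100 mm^2)."""
    length, width, _height = dims_mm
    return length * width / 100.0


for name, dims in [("Raspberry Pi B+", PI_B_PLUS_MM),
                   ("Edison + mini breakout", EDISON_MINI_MM)]:
    print(f"{name}: {volume_cm3(dims):.1f} cm^3, {footprint_cm2(dims):.1f} cm^2")
```

This works out to about 92.8 cm^3 and 47.6 cm^2 for the Pi versus 13.9 cm^3 and 17.4 cm^2 for the Edison, roughly a 6.7x difference in volume.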

The operating voltage of the Edison is perfect for single-cell LiPo operation; it even has a built-in battery charge controller. The Raspberry Pi, on the other hand, requires 5V, so you'll need some kind of boost circuit or bulky battery pack to power it.
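To make the voltage point concrete, here's a small check of where a single-cell LiPo sits relative to each board's supply window. The Edison's 3.3V to 4.5V window comes from the table above; the LiPo voltages (4.2V full, 3.7V nominal, 3.0V cutoff) are typical values rather than figures from this post, and the Pi's ±5% tolerance around 5V is likewise an assumption.

```python
# Typical single-cell LiPo voltages (assumed; check your cell's datasheet).
LIPO_FULL_V = 4.2
LIPO_NOMINAL_V = 3.7
LIPO_CUTOFF_V = 3.0

# Edison supply window from the comparison table.
EDISON_MIN_V, EDISON_MAX_V = 3.3, 4.5
# The Pi wants a regulated 5 V; the +/-5% tolerance here is an assumption.
PI_MIN_V, PI_MAX_V = 4.75, 5.25


def in_window(v, vmin, vmax):
    """True if a supply voltage v falls inside [vmin, vmax]."""
    return vmin <= v <= vmax


for label, v in [("full", LIPO_FULL_V), ("nominal", LIPO_NOMINAL_V),
                 ("cutoff", LIPO_CUTOFF_V)]:
    print(f"{label} {v:.1f} V -> "
          f"Edison direct: {in_window(v, EDISON_MIN_V, EDISON_MAX_V)}, "
          f"Pi direct: {in_window(v, PI_MIN_V, PI_MAX_V)}")
```

Under these assumptions the Edison can run straight off the cell from full charge down to about 3.3V, while the Pi never falls inside its window without a boost converter.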

Last but not least, consider the presence of the Quark onboard the Edison. While it's not supported yet, future software releases from Intel will enable this core, allowing real-time processes to run independently of the Linux cores, which can be very important in embedded systems with a high cost of failure. It also ensures that stringent real-time applications can be handled without requiring a real-time Linux kernel.

A much more reasonable comparison might be to stack up the Edison against the Raspberry Pi Compute Module; I'll leave that as an exercise to the reader.

The final point I'm going to make: the Edison can be used with the Arduino IDE, but you'll get the most mileage out of it by using other programming methods. However, those other methods (it supports GCC and Python now, with Node.js, RTOS, and Visual Programming support to follow soon) are not for beginners. We will, of course, be adding tutorials, projects and examples soon, especially once our Blocks start to hit the storefront!

Let us leave the trolls behind and code bravely into a better future.

Intel's competitor for the RPi is the Galileo. As Intel said at the Edison launch, the Edison was designed for the IoT on purpose. This means a lot of subsystems that the RPi has will not be on the Edison, for that reason.

It's nice to see a big company like Intel try to help teach STEM and provide the platforms to help it in that area. Can't go wrong with more competition on low cost development boards.

Time Lord sgrace - we miss you! (also.. what you said)

Well, they accidentally put this article up last week and I was able to comment on it before they took it down! Yeah, work has been killer since I just got onto an OpenCL project, so in a few weeks I should be able to return.

I can't understand how some people think the huge variety of development boards that are available is anything but a good thing. For me, as well as the hardware capabilities, the most important things are the manufacturer's documentation and the community that gathers around the boards. It'll be interesting to see how the Edison fares with both of those. I think getting SparkFun on board is a great way to get the community up and running! It seems to me that open documentation, especially around the graphics and video capabilities of their chips, will be Intel's best way to combat ARM's domination in this end of the market. ARM themselves, as well as Qualcomm, Samsung, nVidia, AllWinner, Rockchip, Imagination Technologies, etc. are stuck in the "no open-source, binary blobs only" world; only Freescale seems to be committed to openness, and their chips aren't terribly popular. Ooops, I'm waffling... in short, I look forward to seeing the quality of the documentation around this board before I commit to it.

Rob, I'd offer an alternate perspective on the profusion of development boards -- dilution of developer attention.

Perl and Arduino both became wildly successful not because of technical superiority (and I'm actually a fan of the design of both of them) but because of the vast amounts of library and sample code developed and shared for each of those platforms.

Granted, more folks are playing with microcontrollers and development systems today than ever before, so it's a bigger game. But the greater the number of development boards on the market, the fewer will have large communities surrounding them and the fewer will have large repositories to reduce the barrier to entry.

Just another perspective.

*clap* I can see the Edison being an elegant solution in places the RPi doesn't quite fit... mainly wearable and mobile platforms. They don't overlap much in my mind.

I think the other big difference is the knowledge barrier to entry at the moment. The RPi has amazing documentation and is easy to start out with. The Edison is new and has had very lackluster documentation out of the gate. It will pick up if it develops a community, but judging by the backlash I'm worried. I'm hoping SparkFun can help turn that around. (pleeeease... no pressure :-P )

We will. Yes, the Pi has a lot more documentation out there, BUT was that true less than one month after it released? Probably not.

We're working on projects, libraries, examples, and all kinds of great stuff to go with it. No fear there.

Most chip/board manufacturers have very little external documentation at the launch of a product. I could give a bunch of reasons why, but generally the documentation writers are waiting for the people who worked on the product to give them examples and demos and help write the documentation. As of right now, I have to review 5 major documents for new features of my company's software release, but other things are taking a higher priority than the documentation. (It's the way the world is.)

Documentation will always get better over time, so it's just a waiting game.

The BeagleBone Black compares a lot better. It too can be powered from a LiPo pack directly and integrates a battery charge IC. It's not quite as power efficient, at 2.3W peak and 1.05W idle, but it does have a lot more I/Os, including multiple UARTs, I2C and SPI buses, and PRUs that are accessible right now. And it has the USB, HDMI, and Ethernet that some people want. And it's $55, not including BT or WiFi. But really, your typical robot doesn't need both BT and WiFi, and often needs neither.

The RPi is a fine, cheap little Linux desktop, but it's really poorly spec'd in comparison to everything else out there. It's cheap, and that's really its only advantage.

The BBB technically can be powered directly from a single-cell LiPo, but it's not ideal: even setting aside the high power consumption, one of the 3.3V rails is driven by an LDO with 500mV dropout at peak load, and AFAIK you have to solder the battery leads and safety thermistor/resistor directly to the board, so swapping out different-sized batteries isn't practical.
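The dropout point is easy to put numbers on: an LDO can only hold its output while the input stays at least one dropout voltage above it. A minimal sketch using the 3.3V rail and 500mV dropout from the comment above; the 4.2V and 3.7V test inputs are assumed typical single-cell LiPo voltages, not figures from the thread.

```python
def ldo_min_input_v(v_out, dropout_v):
    """Lowest input voltage at which an LDO can still hold its output."""
    return v_out + dropout_v


def ldo_in_regulation(v_in, v_out=3.3, dropout_v=0.5):
    """True if the LDO can still regulate v_out from v_in at this dropout."""
    return v_in >= ldo_min_input_v(v_out, dropout_v)


# Assumed single-cell LiPo voltages: full charge and nominal.
for v_in in (4.2, 3.7):
    print(f"{v_in:.1f} V in -> 3.3 V rail regulated: {ldo_in_regulation(v_in)}")
```

With these numbers the rail needs at least 3.8V in, so at peak load it falls out of regulation once the cell sags below that, well before a single cell is actually empty.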

chrwei said:

Actually, your typical robot does need some untethered controller for human training and piloting. While some try that with IR remotes, that's a crock... running it from an app on your smartphone is far more practical, just like some of the more fun quadcopters.

The RPi is a SBC -- Single Board Computer.

The Intel Edison is a SoC -- System on a Chip.

SoC devices are typically smaller, more powerful, and use less power than any SBC. The tradeoff is that it's a barebones platform/framework that you choose how to integrate. It's not a computer in the sense that the RPi is.

The Edison is more rightly compared with Freescale's SoC offerings.

A more direct comparison is to the RasPi Compute Module: http://www.raspberrypi.org/raspberry-pi-compute-module-new-product/. I believe the Edison is more powerful, smaller, and similarly priced.

The viewpoint that the Edison's CPU is less powerful because its core speed is lower is extremely uneducated; benchmarks (e.g. http://www.davidhunt.ie/raspberry-pi-beaglebone-black-intel-edison-benchmarked/ ) have put the Edison around 5 times faster in CPU terms, which makes this an extremely misleading article. It would be nice if someone could fix it for the author and maybe explain to them why they shouldn't judge different architectures on core speed.

Geez - everyone talks about "wearables" like it's a huge market or something. I'm 50, so I'm "old" by today's standards, but do people really get excited today about wearing shirts with lots of flashing LEDs sewn onto them?

Good question. I think wearables are less likely to be LEDs in a T-shirt and more often costumes, bags, or stuffed animals. It's amazing what you can do with "wearables". Build an accelerometer into a headband and track how much you move in your sleep, how much force a football helmet receives, or when an elderly person falls, so it can call for help. We've seen an 8th grader put flex sensors in a glove that translates hand movements into sign language and can print text. You can build a stuffed bear that has a built-in MP3 player, or plays a recording of mom singing a lullaby, or works as a baby monitor. "Wearables" is one of those think-outside-the-box categories.

@everyone,

Comparing things like the Pi, the Edison, and even the Jetson TK1, is an interesting pastime, but saying that (for example), the Pi isn't a good platform for "robotics" (or whatever), because it runs Linux misses the point. In fact, the TK1 has a development O/S which is also a Linux re-spin.

As I notice from many of the prior comments, what you want to use really depends on what your specific application is.

You CAN do interesting things with the Pi, if you really want to. In order to do that - as is also true with the Edison and the TK1 - you have to code "bare metal". And yes, you can do that on the Pi too. If you REALLY want to be totally anal with your code, drop down into raw assembler. Code everything else in C/C++, and the really time-critical stuff in assembler.

Now, I don't want to come off like an absolute fan-boy of the Pi, but I think it's being criticized for the wrong reasons. The Pi has more interfacing options than just a few GPIO pins. There's I2C (for one), and a few other interesting interface methods too, and I read this in a "for beginners" guide to the Pi.

I guess the bottom line for my rant is this: Never underestimate the power of human ingenuity. If you need to get something done, and you wish you had a TK1, but all you have is a Pi or an Edison, it's a better than even-money bet that you'll figure out how to do it.

What say ye?

Jim (JR)

Thank GAWD someone said it finally. I got similar reactions when I found out I was chosen to receive a Galileo: It's utter horse manure, better used as a doorstop, but not even good at that, etc. Really, it made me want to work harder to find uses for it.

"... if you judge a fish by its ability to climb a tree, it will live its whole life believing that it is stupid.” -Albert Einstein

I agree completely with this comparison. I have just received my first Edison, and I have not yet switched it on because I am waiting for the mating connector. Seen on my bench side by side with an RPi, the difference is incredible. The RPi is a masterpiece, but it is absolutely not wearable. And the Edison is a FULL-FEATURED x86 machine, not a RISC! The only problem with the Edison is the pitch of the connector: 0.4mm is too small. Down to 0.65 or 0.5mm pitch, hand soldering is, if not easy, at least possible. Below that, difficulty increases quickly. Considering that there is still some room on the board, the Edison should be more hobbyist-friendly.

I'm looking at cost. I don't understand how the Edison fits in with robot building at all. Current prices: Edison $55, GPIO connector $16, PWM $22.

So we are already talking about close to $100 for just the electronics. There must be a cheaper solution for that type of power. Maybe I'm misguided, but I really think that Intel has priced themselves out of the hobby market.
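Summing the quoted prices confirms the ballpark. This sketch covers only the three items listed in the comment (prices as quoted at the time), not a battery, motors, or a motor driver.

```python
# Prices as quoted in the comment above (USD, at the time of posting).
parts_usd = {
    "Edison": 55.00,
    "GPIO connector": 16.00,
    "PWM": 22.00,
}

# Subtotal for the listed electronics only.
total_usd = sum(parts_usd.values())
print(f"Electronics subtotal: ${total_usd:.2f}")
```

That comes to $93.00 before any mechanical parts or power.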

Does anyone know what is the resolution of the Edison analog inputs? Is it 10bit, 12bit, 14bit, etc....? Any info would be helpful.

Edison has no native ADC inputs. The ADC block adds 12-bit conversion, though.
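For a sense of what 12 bits buys you, here's how an N-bit converter's step size (one LSB) and a raw reading map to volts. This is a generic unipolar model with an assumed 3.3V full-scale range; the actual ADC block's input ranges and output encoding may differ, so check its datasheet before relying on these numbers.

```python
def lsb_volts(bits, full_scale_v):
    """Voltage step per code for an N-bit converter over full_scale_v."""
    return full_scale_v / (2 ** bits)


def code_to_volts(code, bits=12, full_scale_v=3.3):
    """Convert a raw unipolar ADC code to volts (assumed 3.3 V full scale)."""
    if not 0 <= code < 2 ** bits:
        raise ValueError(f"code {code} out of range for a {bits}-bit ADC")
    return code * lsb_volts(bits, full_scale_v)


for bits in (10, 12):
    print(f"{bits}-bit: {2 ** bits} codes, "
          f"{lsb_volts(bits, 3.3) * 1000:.2f} mV per step")
```

Under those assumptions, going from 10 to 12 bits shrinks the step from about 3.2 mV to about 0.8 mV, and a mid-scale reading of 2048 maps to 1.65V.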

One thing they both have in common is the lack of documentation (or the disorganized way it was posted) when each came out. Seriously, the Edison guys confound the masses by first showing how to get the "Blink" sketch to work and then posting next-step links that are all native C++ code. Huh? What?? How do you go from Blink to showing IoT development with Eclipse and GDB?

If you want to build a robot for fun, and it doesn't have to get any actual work done, you can disregard the following. But if you want to build a serious robot, and you're considering the Edison, read on.

Performance per watt is critical, CRITICAL, for robotics. You can't just add a battery to make up the difference in power drain, because the motors need more current to carry the additional weight. And soon, you'll need bigger motors, and more batteries again, and so on. It's the same downward spiral found in rocket science: adding fuel and engine capacity just to lift more fuel.

But the Intel Atoms have always been power hogs. This may well be the most efficient Atom Intel has released; but is it really better than a smartphone ARM SoC? I haven't crunched the numbers on the Edison, but traditionally, ARM and PowerPC chipsets do better. You should only choose an Edison if the convenience of an existing platform outweighs the improved performance of anything else, or if your project has a hard, absolute requirement for running X86 code. Generally speaking, no robotics company ships a robot with an X86 processor if they can help it.

A few of you are talking about processing video for a robot. In terms of time to completion and power efficiency, this is better done by a carefully selected GPU. Note that this almost certainly isn't the usual sort of GPU you would use to drive a display; a robot only needs a specific few kinds of video processing to be done, and never any of the more general purpose sort of processing used to render fancy graphics; a GPU that still includes that sort of support is wasting your power.

I think, ultimately, this is Intel's bid to try to tap into the smartphone market. A handy, approachable, smartphone development core. Sure, the speed is a little low, but it's enough to get a developer started.

So, what do I want from a company looking to develop a processor chipset for robotics? Multiple Simultaneous Interrupts. Sure, a CPU can have multiple cores, but those cores still sit on only ONE System Bus! Which means that only one interrupt can start being processed at a time. You want enough interrupt support so that if enough bad things happen at once, you can correctly deal with them all before the system fails to react in time. What chipset currently does this? None, that I know of. See, general purpose computing doesn't need this. It's strictly a robotics requirement. So a chip that does this probably won't be a mass-market chip, and will ultimately be more expensive. Unless of course, general computing requirements find some other reason to have a multi-channel switched fabric.

These are variants of the Silvermont core built on 22nm. Their Performance/Watt compares quite favorably to Cortex A15.

The older Atoms were always very competitive on the actual CPU core, it was the rest of the chipset that was terrible. Silvermont fixed that a good bit by finally moving to legitimate SoC; Silvermont based tablets got comparable battery life to their Android/iOS equivalents, and Windows 8 is more resource-intensive than Android/iOS.

Intel has many, many development boards targeted specifically at smartphone/tablet manufacturers. A board with no GPU is pretty much useless for any smartphone/tablet dev. Modern Android and Windows both require a GPU to even boot.

Also, I think you're severely mistaken if you think that no robotics company ships an x86 packing robot if they can help it. Nearly every robot at the company I work for runs at least one, frequently many more than one, Intel CPU. The power draw of the CPU is absolutely dwarfed by the power draw of the rest of the system. I think what you mean to say is that no small robot does, but really this has nothing to do with x86 itself and everything to do with there not being an x86 CPU with the requisite power envelope except for Atom, which has never really had a development platform suitable for being used in small-scale robots.

As for your comment about GPUs, they have that already... they're called FPGAs/ASICs. You run a GPU when you want to be able to easily and rapidly develop software, and the cost in power vs. an FPGA implementation is outweighed by the ease of using commodity tools like OpenCV or nVidia's own CUDA-accelerated implementation. Even then, their Jetson board gets you a Tegra K1, which has a full Kepler SMX on it plus 4 Cortex-A15s in a 2W power envelope.

While FPGAs are getting better all the time, they're still too power inefficient for robotics except for very particular computationally intractable problems. For vision processing, there's no reason to use an FPGA instead of a GPU, so long as you pick the right one.

Smart phone and tablet GPUs are changing all the time, so leaving graphics out of the Edison package gives developers more options. Further, with so many form factors and corresponding resolutions to pick from, there's no one RIGHT graphics solution. The Edison lets the hardware developer integrate Intel's CPU into prototype hardware without them having to worry about how to integrate a complex chip package that really requires a full production run to assemble; this allows them to focus on prototyping the application specific hardware.

Do you actually ship robots to customers, or are they merely prototypes? Aside from reluctant concessions to JAUS compliance, and other similar tough pills to swallow, I haven't seen anyone choose to use X86 on a robot slated for production.

We ship many robots to customers. But these aren't $100 vacuum cleaners. They're... quite a bit larger than that. An example: https://www.youtube.com/watch?v=N_JsLOfm1aI (disclosure: I do not work for CAT, I do work for a company that makes the autonomy system)

Again, you need to be more specific with your scale. If we're talking something the size of a Roomba, then of course you aren't going to use a 200W computing system. But if we're talking something the size of a car, or a small building, that's a completely different story.

And I have to disagree with you about the FPGA as well. I can't really get into specifics because a lot of it is NDA'd, but let's say we can do a lot more for a lot less power (and with MUCH less latency) on an FPGA than we can on a GPU, although Tegra K1 and its successor might wind up changing that a bit. That's not to say there aren't lots of really useful applications for GPUs; there are just specific problems that lend themselves very, very well to FPGA implementations.

Yes, but now you're ignoring the entire context within which I made my comments. Your robot can do a lot when it has an INTERNAL COMBUSTION ENGINE to charge the batteries. I was clearly dictating a context in which that was not an option, one in which batteries alone provide the power. When you have an alternator on an internal combustion engine providing the power, you can waste power on anything you want. Intel chips, FPGAs, ANYTHING. When your entire system is battery powered, and has to perform a long-term mission anyway, Intel chips and FPGAs aren't an option.

Everything you said is true for really serious robotic development. I don't think Intel is targeting that with the Edison, though, are they? They are more about marketing to IoT and wearables with the Ed (at least that is what I've seen on their website).

Thanks Folks! This post and the subsequent discussions are firming up my perception of the utility and potential for applications of this tool. Also very stoked that Wolfram has announced support for this platform.

Honestly, I'm more than excited to see this and can't wait to figure out how to use mine (got it at Maker Faire). I'm a fan of the idea of the Raspberry Pi, but I never really found a use for it besides having it. I'm sure there are people sitting at their computer desks in shock saying "How could you not have? It's amazing!" and I'd have to agree with them, but for robotics (what I mostly do) the Edison seems like exactly the right tool for the job. The 9DOF board as well as the I2C board add-ons make the Edison perfect for robotics applications. With the Raspberry Pi I had to work with a small number of GPIO pins, as well as the OS, as well as finding a way to connect to WiFi, among other things. Again, there are probably people sitting there saying "Wow, you don't know, it's waaaaayyyyyy easier than that and you're doing it wrong," and if I am then my bad, please correct me.

But I can't wait to get the Edison into a huge project of mine... maybe a Gundam suit or a sentry from Destiny... or something crazy like that. The fact that it already supports Arduino is awesome and makes it a lot easier to use out of the box. Anyway, SparkFun, it was awesome talking to you guys at Maker Faire. Can't wait to see some tutorials for the Edison (because all that's on Intel's site is basic Arduino stuff, and while that's great I'm sure there's more you can do with it than blink an LED or scroll LCD text).

Note: After watching the Intel getting started video for the Arduino add-on board, I noticed they used a 12V wall power supply for the Arduino board. I found this a little weird, as most other boards, including Arduino, run off of a 9V supply. After some research, I found that the Arduino add-on PDF on their support site says it will work with anywhere from 7V to 15V, so my guess is a 9V supply should work. I will test this myself and post something about it on the Edison support site. Anybody else know anything on this? Thanks, -MiKe

I feel it needs to be pointed out that Intel's PR folks called one of their products "The Edison." I'm assuming it's a reference to the turn-of-the (previous) century American permutation expert, Thomas Edison. I had assumed Intel was staffed by people who know how to name a product based on symbols that inspire thoughts of innovation and ingenuity. If they want to be associated with electrocuting cats and trial-and-error-style "engineering," I suppose they nailed it.

See: Comment Guidelines #3

And besides, Tesla is becoming such a mainstream name...

Many apologies. I was not trying to troll. I'm not sure how Tesla factors into why Edison chose such brute-force approaches to problem solving. (Yes, I know about The Oatmeal. He didn't really change the way I thought of Edison since I first learned about him in third grade.) I will avoid future comments regarding large companies making such naming faux pas.

I think Intel's biggest failure in causing market confusion on exactly what this is for was not making it explicitly clear that there is no GPU, nor is there any way to get a video signal whatsoever out of the board. An SoC without a video signal is very clearly not intended to be interacted with directly as a computer. Heck, the only reason the Raspberry Pi is even remotely useful as an SBC, with a CPU as weak as its own, is that it has a very, very nice GPU.

Once I realized that, it was immediately clear that this was intended to be more Arduino on Crack than a viable SBC competitor. The fact that its default programming mode is via the Arduino IDE should have been a pretty big hint, but confirming there was no GPU was basically a dead giveaway.

As to people looking at clock speed as a measure of relative performance... that's just foolish. The ARM11 in the RasPi is something like 400% slower clock per clock than the Atom, forget Performance/Watt. And there are two CPU cores in the Edison. Even with a 200MHz clock speed difference, the Edison is still much, much faster at pure number crunching.
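The clock-per-clock argument can be turned into a rough estimate by modelling throughput as work per clock × clock rate × effective cores. This is a back-of-envelope sketch, not a benchmark: it takes the comment's "400% slower" at face value as the Atom doing about 4x the work per clock (an assumption; the phrase could also be read as 5x) and assumes perfectly parallel work for the two-core case.

```python
def relative_throughput(ipc_ratio, f_a_mhz, f_b_mhz, cores_a=1, cores_b=1,
                        parallel_efficiency=1.0):
    """Back-of-envelope throughput of chip A relative to chip B.

    Models throughput as (work per clock) x (clock) x (effective cores).
    parallel_efficiency = 1.0 assumes perfectly parallel work; real code
    usually scales worse. This is not a substitute for a benchmark.
    """
    eff_a = 1 + (cores_a - 1) * parallel_efficiency
    eff_b = 1 + (cores_b - 1) * parallel_efficiency
    return ipc_ratio * (f_a_mhz * eff_a) / (f_b_mhz * eff_b)


# Assumed: the Atom does ~4x the work per clock of the ARM11 (the comment's
# rough figure, taken at face value). Clocks are from the comparison table.
single = relative_throughput(4.0, 500, 700)
dual = relative_throughput(4.0, 500, 700, cores_a=2)
print(f"one Atom core vs ARM11: {single:.2f}x, both cores: {dual:.2f}x")
```

Under those assumptions the lower clock is more than offset: roughly 2.9x on a single thread and up to about 5.7x with both cores busy. Treat this as illustrative only; measured benchmarks are the real test.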

The Edison is really meant for applications where you'd use a traditional micro-controller like an Arduino or bare ATMega/Cortex M, but need a lot more number crunching ability supplied by the much more powerful Atom cores, and the task-management facilities of a full embedded OS implementation. As you said, think robot control hardware/PLC applications/industrial automation.

The potential Performance/Watt processing power was precisely why I was intrigued by the Edison platform; I had assembled a 5-element cluster of Atom processors prior to this. At the time, the only ready-made solutions had a GPU alongside the CPU, inflating the amount of power used by multiples (can't remember the exact numbers). In the low-power category, the Atom still had one of the highest PPW figures around, and x86 compatibility meant a low barrier to entry for the software team. We didn't need the GPU, however; access to the OS was through a terminal. All we needed it to do was math, and lots of it. I would certainly like to see a benchmark of its computing power.

Me too, actually.

I (accidentally) left one of these idling on my desk overnight on an 850mAh LiPo. It's been running most of the day, too. Probably 18 hours or more on that battery, which wasn't fully charged at the outset.

At 4V, it idles below 30mA. That's pretty respectable.
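Those idle numbers make for an easy runtime estimate: capacity divided by load current. A minimal sketch using the 850mAh cell and ~30mA at ~4V from the comments above; for contrast it also runs the Pi's ~3W figure from the comparison table off the same cell, assuming a 3.7V nominal cell voltage and a lossless boost converter (both assumptions, so that figure is optimistic).

```python
def runtime_hours(capacity_mah, load_ma):
    """Ideal runtime: capacity divided by a constant load current."""
    return capacity_mah / load_ma


def power_w(volts, milliamps):
    """Power in watts from volts and milliamps."""
    return volts * milliamps / 1000.0


# Figures from the comments above: 850 mAh cell, ~30 mA idle at ~4 V.
edison_idle_h = runtime_hours(850, 30)
edison_idle_w = power_w(4.0, 30)

# For contrast: the Pi's ~3 W (comparison table) drawn from the same cell.
# Assumes 3.7 V nominal and a lossless boost converter, so this is optimistic.
cell_wh = 0.850 * 3.7
pi_h = cell_wh / 3.0

print(f"Edison idle: {edison_idle_w:.2f} W, up to ~{edison_idle_h:.0f} h")
print(f"Pi at ~3 W: ~{pi_h:.1f} h")
```

About 28 hours is an upper bound for a full cell at idle, which squares with the 18+ hours observed on a partly charged one; the Pi would flatten the same cell in roughly an hour.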

I think you missed the next 3 sentences of my post, in which I basically said exactly what you just did; namely, that the Edison gives you a lot more processing power, as well as the flexibility of the task-management capabilities of an embedded Linux distribution.

Although you'd be pretty amazed what you can fit onto an ATMega equivalent; Mint's robots fit an entire SLAM implementation into it with about 0.25m accuracy, although the task space is obviously only the size of a standard room.

I didn't miss it... I was just objecting to dismissing the solution of a more powerful platform by equating it to something very, very limited... hence the quote I was objecting to.

I made the point that this additional capability greatly advances applications past the limits of Arduino, so much so, that they are hardly equivalent.

Thinking too fast ... High resolution stereolithography for 3D printer robots, and Stereo Vision Processing for ground based intelligent autonomous vehicle robots and flying drones/UAV systems.

I think you're a bit ambitious on stereo vision processing at anything greater than ~240p. OpenCV running on a 2.5GHz Core i7 can only manage a few FPS doing rectification and a disparity map on a 720p stereo image without GPU assistance. An Atom is definitely not going to keep up.

Actually for most applications, other than collision avoidance for high velocity autonomous vehicles, a few FPS is all that is needed to integrate object detection/avoidance and to provide realtime GPS/inertial location/heading corrections.

And interestingly enough, also allows similar processing across multiple location/heading ultrasonic range finder samples to construct 2D/3D object maps using techniques similar to ultrasound/MRI.

Plus odometry from multiple environment sensors.

That is a HUGE leap forward over what an 8MHz Arduino is going to do.

Sure, but then I can use a Jetson and get nearly 60FPS @ 480p color for only an extra Watt of power. And still have 4 Cortex A15's to do things other than the image processing. My point is, there are much better solutions in terms of Performance/Watt for doing image processing than using an Atom that doesn't have a GPU.

Also, Drones/UAV's fit pretty squarely into the category of 'fast moving vehicles'. Speaking from experience, even on the ground 2FPS image data for navigation isn't very useful if you're moving much faster than 1-2m/s.
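The 2 FPS point is easy to quantify: between updates, the vehicle covers speed divided by frame rate. Here's that arithmetic for the frame rates mentioned in this thread (2 FPS, and the ~60 FPS quoted for the Jetson) at 1 and 2 m/s.

```python
def metres_per_frame(speed_m_s, fps):
    """Distance travelled between consecutive camera frames."""
    return speed_m_s / fps


# Frame rates from this thread; speeds bracket the 1-2 m/s mentioned above.
for fps in (2.0, 60.0):
    for speed in (1.0, 2.0):
        print(f"{fps:>4.0f} FPS at {speed:.0f} m/s: "
              f"{metres_per_frame(speed, fps):.3f} m between frames")
```

At 2 FPS and 2 m/s the robot moves a full metre blind between frames; at 60 FPS that drops to a few centimetres.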

Your comparison says 40 pin connector, but isn't it a 70 pin?

For me that is the only drawback, I mean I am finally OK soldering SSOP ICs onto my custom PCBs, this new socket seems like it would make it tough to solder the mate for it to your custom PCB.

I just get the feeling this was meant more for small startup businesses to quickly create their custom hardware to mate to the Edison when making new products and not really for experimenters. Or if you want to mate it up to a Sparkfun board or other competitor's board that meets your needs, but not really design any custom hardware yourself.

Just sayin' I could be totally wrong on this, but I was very enthused about it until I saw that connector.

That's where breakout boards come into play, along with the whole Arduino wrapper that allows existing low-pin-density shields to be used.

The 0.4mm pitch connector is slightly painful ... but easily done with a temp controlled hot plate and hand stencils. Or by dragging a large solder ball across the highly fluxed pads with an iron, tacking with a precision iron and stereo microscope, and reflowing in a table top oven or on a hot plate.

I've been doing high-density FPGA BGAs that way for more than 10 years. Not your normal less-than-electronic-tech skills... but this world has MANY hobbyists that can easily learn to take on more difficult projects... including many retired industry techs and engineers. I've shown high school robotics kids how to do it in my kitchen, including reballing FPGAs and other chips, as is necessary for repairing gaming systems.

Oops. Good catch. I fixed it to say 70 pin.

You're not liable to hand solder the mate for this thing, but I could easily see a hand-stencil with touch-up going pretty well. That's how our protos have been going together.

Nice post, and exactly what I've been trying to convey to those comparing it to the RasPi. I think the difference is if one comes from the embedded side and sees this as a much more powerful embedded SoM, rather than those coming from the desktop side, and trying to envision this as a small SBC. Obviously it doesn't compare well as a small, cheap SBC.