About the Author

Jesse Brockmann is a senior software engineer with over 20 years of experience. Jesse works for a large corporation designing real-time simulation software, started programming on an Apple IIe at the age of six, and has won several AVC events over the years. This is the last installment in the Spatial AI Competition Series. Thanks for tuning in, everyone!

Read the first and second blog posts if you are interested in, or need to catch up on, the logistics of this project!

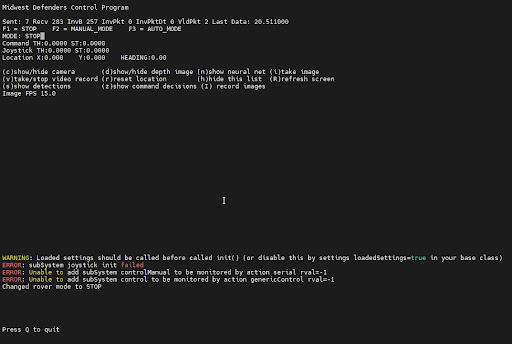

Curses-based data display and rover control program

Continuing from where we left off...

Each run produces a datalog file that records the x and y position of the rover, along with the relative positions of the markers seen during the run. This could be used to generate a map with the locations of each marker. Because the marker location data is noisy, some higher-level algorithm would be needed to locate the markers with a high degree of certainty from the data of multiple runs. The rover's location is computed from encoder output and heading data from the BNO-055.
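To illustrate how encoder output and a heading can be combined into an x and y position, here is a minimal dead-reckoning sketch. It is not the rover's actual code. The encoder resolution, wheel diameter, millimeter units, and the heading convention (0 degrees along +y, increasing clockwise) are all assumptions made for the example.

```python
import math

# Hypothetical drivetrain constants, chosen only for illustration.
TICKS_PER_REV = 360         # encoder ticks per wheel revolution (assumed)
WHEEL_DIAMETER_MM = 100.0   # wheel diameter in millimeters (assumed)


def ticks_to_mm(ticks):
    """Convert an encoder tick count into distance traveled in millimeters."""
    return ticks * math.pi * WHEEL_DIAMETER_MM / TICKS_PER_REV


def update_pose(x, y, delta_ticks, heading_deg):
    """Advance (x, y) by the distance implied by delta_ticks along heading_deg.

    Assumed convention: heading 0 points along +y and increases clockwise,
    like a compass. A real IMU setup may use a different convention.
    """
    distance = ticks_to_mm(delta_ticks)
    heading = math.radians(heading_deg)
    return x + distance * math.sin(heading), y + distance * math.cos(heading)
```

Calling `update_pose` once per encoder reading, with the latest heading from the IMU, accumulates a position estimate. Errors from wheel slip accumulate too, which is one reason the logged positions should be treated as approximate.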

Example Run Data

```
rover: 499.995667 499.780090 356.750000
obstacle: marker -31.577566 -145.256805 912.055542 0.993661 0.049720 0.038363
end
rover: 499.995667 499.780090 356.750000
obstacle: marker -28.925745 -146.694870 895.138916 0.995610 0.050098 0.037815
obstacle: marker 189.767776 -114.743301 1912.000000 0.697999 0.030058 0.016747
end
rover: 499.995667 499.780090 356.750000
obstacle: marker -28.925745 -146.694870 895.138916 0.995610 0.050098 0.037815
obstacle: marker 189.767776 -114.743301 1912.000000 0.697999 0.030058 0.016747
end
rover: 499.995667 499.780090 356.750000
obstacle: marker -28.925745 -146.694870 895.138916 0.995610 0.050098 0.037815
obstacle: marker 189.767776 -114.743301 1912.000000 0.697999 0.030058 0.016747
end
rover: 499.995667 499.780090 356.312500
obstacle: marker -28.668959 -147.440353 887.192322 0.995123 0.052451 0.037603
end
```
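A log in this shape is straightforward to read back for later analysis. The sketch below groups lines into frames, each ending at `end`. The field meanings are not documented in this post, so the names are assumptions: the three `rover:` values are treated as x, y, and heading, and the numbers after each obstacle label are kept as a raw list rather than given names.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Marker:
    label: str            # e.g. "marker"
    values: List[float]   # raw numbers; their meaning is not documented here


@dataclass
class Frame:
    x: float
    y: float
    heading: float        # assumed: third "rover:" value is a heading
    markers: List[Marker] = field(default_factory=list)


def parse_run_log(text):
    """Parse run-log text into a list of Frame objects, one per 'end' line."""
    frames = []
    current = None
    for raw in text.splitlines():
        parts = raw.split()
        if not parts:
            continue  # skip blank lines
        if parts[0] == "rover:":
            current = Frame(*map(float, parts[1:4]))
        elif parts[0] == "obstacle:" and current is not None:
            current.markers.append(
                Marker(parts[1], [float(v) for v in parts[2:]])
            )
        elif parts[0] == "end" and current is not None:
            frames.append(current)
            current = None
    return frames
```

With the frames in memory, combining marker observations across several runs (for example, by clustering and averaging) becomes a separate step that can be tried offline.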

Path rover navigated using markers and signs

An outstanding issue was detecting and avoiding unknown objects. Code was tested to convert the depth map from the Oak-D-Lite into a point cloud that could be used to locate obstacles.
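For readers unfamiliar with the idea, a depth map can be turned into a point cloud with the standard pinhole back-projection. The sketch below is a generic illustration, not the code tested on the rover. It assumes depth values in millimeters with 0 meaning "no measurement", and the intrinsics `fx`, `fy`, `cx`, and `cy` are placeholders that would come from the camera's calibration.

```python
def depth_to_points(depth, fx, fy, cx, cy):
    """Back-project a depth map into a list of (x, y, z) points.

    depth  : list of rows, each a list of depth values (assumed millimeters,
             with 0 marking pixels that have no valid measurement)
    fx, fy : focal lengths in pixels (placeholders; use calibrated values)
    cx, cy : principal point in pixels (placeholders; use calibrated values)
    """
    points = []
    for v, row in enumerate(depth):        # v is the pixel row
        for u, z in enumerate(row):        # u is the pixel column
            if z <= 0:
                continue                   # skip invalid depth
            x = (u - cx) * z / fx
            y = (v - cy) * z / fy
            points.append((x, y, float(z)))
    return points
```

A pure-Python loop like this is slow on full-resolution frames. In practice the same arithmetic would be vectorized, but the geometry is identical.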

Watch Jesse's Webinar with OpenCV about this project!

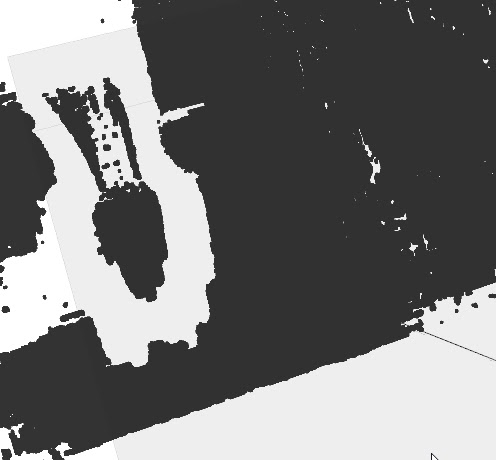

A hand can be seen in the point cloud created from the Oak-D-Lite depth map

This result showed that such a solution may be possible, but much work remains to interpret the point cloud into information that is useful for navigation, and the timeframe of this project did not allow us to complete a solution.

Based on our outcome, it’s clear this is a well-defined problem that can be solved using an Oak-D product. A final solution would likely use the depth output or a lidar to avoid obstacles not detected by the neural network. This project could also be a great motivational tool for kids interested in STEM.

The final video with a demonstration of the rover

At this time, the source code for this project is not open source. This is due to concerns about reused code such as the DepthAI example code, the pdcurses source, etc. However, the source code will be provided to anyone who requests it as part of the review process for our entry into this contest. As time permits, a proper audit of the code will be done, and the code will be released once any licensing issues are resolved.

Issues That Came Up During Development

The Lego Land Rover was not available for purchase from Lego in this time frame, so it was acquired through third-party sellers.

The conversion process from native Darknet output to an Oak-D-Lite compatible blob is a bit difficult, and I actually had to create two conda environments to get the conversion working. Part of the process is in TensorFlow 2, and part of it is in TensorFlow 1. If TensorFlow 2 was used for all steps, the blob could not be created due to unsupported options.

The depth information reported by the Oak-D-Lite seems to have many limitations. The main one I encountered is that a small object by itself in space can have very poor Z distances reported, often much farther away than the object actually is. Our rovers would actually run into signs while the reported distance was still as much as two meters. At no time while traveling towards objects did the reported distance ever become less than one meter. Putting a box or another object behind the signs seems to solve this issue.

Another issue is the autofocus Oak-D-Lite, which is constantly hunting and often gets confused, leaving the whole scene blurry. A rover is also a poor place for an autofocus camera, as the vibrations will probably prevent it from working anyway. As a result, we used the fixed-focus camera for the majority of testing.

Another issue was the power requirements of the Oak-D-Lite. It seems unstable without a splitter for the power/data. The Raspberry Pi 4 just doesn't supply enough power to keep it running. The symptom is intermittent operation: it might work fine for many minutes before failing, requiring a restart of the program or even a restart of the Oak-D-Lite by unplugging it and plugging it back in. Ideally, it would report a power issue, as the Pi does when underpowered.

There also seem to be issues when debugging with the DepthAI core. Sitting at a breakpoint for a while will cause the process to crash due to issues with the various DepthAI threads.

Finally, using a Lego-based vehicle is not recommended for general use in this type of testing. Even an RC car of similar cost is a much better choice due to the frail nature of a Lego drivetrain in a vehicle of this size.

We would like to thank SparkFun for providing parts for the build and Roboflow for their help and the use of the Roboflow software during this contest. Finally, we would like to thank OpenCV, Intel, Microsoft, and Luxonis for this competition.

Jesse’s Rover Scout

Jesse has plans to use the Oak-D-Lite and the code from this competition to compete in F1TENTH and Robo-Columbus events in the future.