Driving 20 hours to get to the salt flats of Nevada tends to start some interesting conversations. I started asking people: "What three things do you think will occur in your lifetime?" It can be something momentous like Whirled Peas, or it could be something small like the invention of indestructible socks. I got some amazing responses!

The following are three of my own predictions:

1) Cars will drive themselves. Realize that I was driving straight across Utah and Nevada painfully willing the car to take care of itself. Thank goodness we have cruise control, but we still have to steer.

I think most folks will agree we are headed towards automation. DARPA has been on it for years. Google is doing all sorts of stuff in the background. Even SparkFun enjoys the challenge with our annual Autonomous Vehicle Competition. For $300 we can build a (small) vehicle that drives itself around obstacles.

So will we have cars driving themselves around this year? Next year? I'm not sure, but I'm confident it'll happen in my lifetime. What does this mean? All sorts of good stuff:

- Fewer deaths on the road

- Less congestion

- More free time for me to read books and write code

- Better gas mileage

- Higher average speeds

- Extreme public transport

- Car insurance will become wacky

My nephew and niece don't know how to dial an old rotary telephone. Imagine a generation that never sees a 'Yield' sign. It's going to be fun!

2) Moore's law will slow down to a crawl. Bunnie Huang is responsible for this one. You can check out his slides from his presentation at the Open Hardware Summit over here.

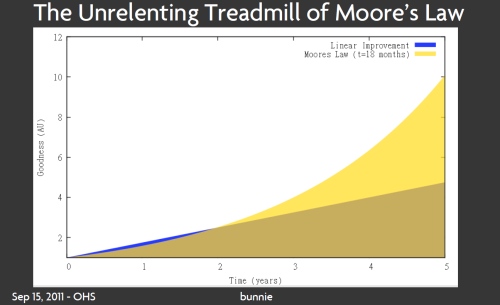

This graph shows Moore's Law as it is today - the number of transistors doubles every 18 months. Looking at Bunnie's graph, we see the blue area represents an awesome electronic gadget that we came up with today (through linear incremental improvement). The yellow area shows that, because of Moore's Law, our grand idea will be overtaken and outdated in two years. Our great idea was outstripped by a solution (or product) that was twice as fast (and perhaps half as expensive). We see this commonly with many consumer devices (cell phones, laptops, flat TVs, etc.), which are upgraded every 18 months or so.

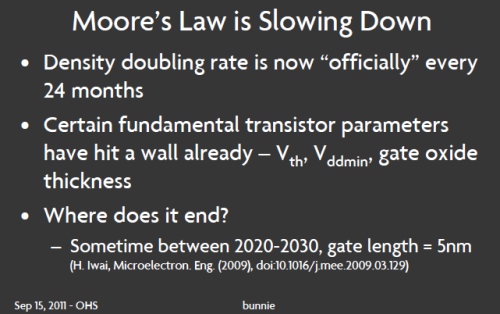

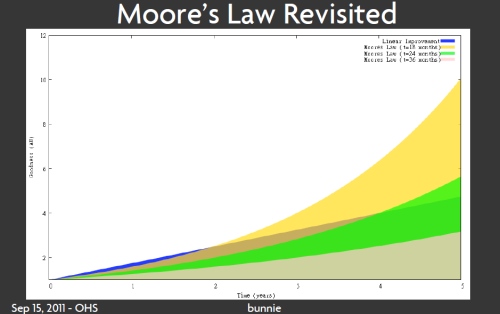

At least that's what I learned in school. It turns out Moore's Law has been revised. It's now every 24 months! And because of some limitations of physics that go way beyond my science fu (but I trust Bunnie any day of the week), Moore's Law will most likely continue to slide. So what happens when we re-graph things with 24 month doubling and 36 month doubling?

The yellow bar is the Moore's law we are used to. It shows our 'blue' idea being overtaken in 2 years. Look at the green bar. It shows that an improvement today will not be overtaken by Moore's Law improvements for 4 years. The beige 36 month bar extends out to 8 years!

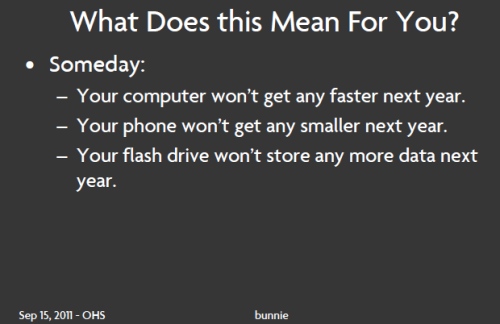
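To put rough numbers on the three doubling periods, here is a minimal Python sketch. It is not taken from Bunnie's slides; the function name `growth_factor` and the six-year window are assumptions chosen only because they give round numbers. It simply compounds a doubling every 18, 24, or 36 months.

```python
def growth_factor(years: float, doubling_months: float) -> float:
    """How many times capacity multiplies over `years` if it doubles
    once every `doubling_months` months (idealized, no plateaus)."""
    return 2 ** (years * 12 / doubling_months)


# Compare the three doubling periods discussed above over a six-year window.
for months in (18, 24, 36):
    factor = growth_factor(6, months)
    print(f"{months}-month doubling: {factor:.0f}x after 6 years")
```

Under these assumptions the 18-month curve is four doublings ahead after six years, while the 36-month curve has managed only two, which is why a gadget built today stays competitive so much longer on the slower curves.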

Imagine the day when our laptops don't get significantly faster the month after we buy one. The jump drive of 2021 may have exabytes of storage on it, but the following year, it won't be any larger. We won't be able to cram any more bits into the jump drive's enclosure. It could be the jump drive that I use for 10 years instead of getting a new one every few months.

I know the idea of Moore's law ending is a heated topic (akin to Peak Oil debates) and there is no right or wrong answer. But I believe that, within my lifetime, we will see a shift from 'discardable' electronics to electronics that are serviced, maintained, and held onto for many years. It's going to be awesome.

3) Day to day encounters will be feedback based. Realize that I am going across a spectrum here. #1 is a shoo-in, #2 is a stretch, #3 is bordering on Sci-Fi. In fact, it's completely Sci-Fi. If you get the chance, I highly recommend Daniel Suarez' book Daemon.

It's a great read! Daemon is science fiction that occurs not in 300 years, but in the next year or two. With a little creativity, Mr. Suarez shows how open collaboration and custom fabrication can lead to surprising innovation (including cars that drive themselves). One of the principles of the book is the idea that people have personal ratings - something like a mix of eBay stars, like buttons, book reviews, and Google stalking. Before the end of my lifetime, I believe people will be rated by how they treat their community as a whole. Volunteer at your local food bank and you're probably going to get some thumbs up. Defraud someone when you sell your used car on Craigslist and your reputation is going to be marred. Before you and I even sit down for coffee I will be able to determine whether you are someone to be trusted or someone to be questioned. I'm not sold that this is a good thing or a bad thing, but I do believe we are headed this way.

We may not have the space in the comments below, but I would love to hear what you think will be achieved in your lifetime. Is it technological or is it sociological? Will we be vacationing in Cuba? Will there be many more online currencies or just one? Will we leave footprints on Mars?

1 - maybe. for it to be truly safe, infrastructure must change. i.e. the road needs to communicate with the vehicle.

2 - nah. sub-atomic transistors are around the corner. a 5nm gate will be big enough to drive a quadrillion quarks through. no problemo. plus, development of organic computers is well on its way.

3 - nah. still kinda surprised by this. not everyone stares at their smartphone all day reading twitter feeds and facebook updates. social media is a big hot air balloon that's going to blow up in a couple years.

prediction 1 - doctors will be largely replaced by massive data sourcing computers (i.e. IBM's Watson). they will digest all the variables and spit out a list of most likely diagnoses.

prediction 2 - lithium will be the new oil in the future.

prediction 3 - stirling solar will become the most efficient means of renewable electricity.

prediction 4 - sparkfun will change the fact that the # symbol screws up my formatting.

Remember that lithium is a resource that, in the end, will be used up like any other resource if not recycled properly. Also, Bolivia, which has a buttload of lithium, isn't planning on selling most of it to the West. The last time they sold stuff to the West at a low price, they ended up paying the highest price: poverty. But, yeah. If used/treated correctly, lithium can become the new oil. :D

Interesting post.

* Now remember most of the problems don't come from the technology side, but from society. Think about the many movies (true or not doesn't matter here) where the traffic lights are easily hacked and a city is thrown into chaos... Do you think most people will trust their cars being driven outside their control? It may eventually happen, but I'm not sure how long it will take to become an acceptable compromise of risk/benefit for the masses. I wouldn't bet that will be in my lifetime.

* As for Moore's law, I am with you in the interest of reducing the pace at which gadgets become obsolete through a more reusable world. I do think the physical limits of Moore's law won't be a big factor in that, though: I dream about a society where everyone realizes the pollution, energy, and actual cost that our current use'n'trash culture leads to. If we ever had to pay for the actual impact on Earth of our latest $5 USB gadget, we would not be trashing items at the speed we do. Moore's law may not be a short-term limitation if quantum physics, photon physics, and other technologies go beyond what semiconductors can do today, but Earth's resources are bounded and will probably hit us way earlier than we think...

So.... the question that is on everyone's mind, when is AVC gonna be this year?

I actually hope that Moore's law slows down because then it will force us to think about speeding up computers differently. As of now it seems like every time there is a line of faster processors or faster RAM or bigger hard drives, the software developers hear about it and start to create more powerful and resource-consuming software. Take Windows(R) for example: Windows 95 took like 5-10 mins to start up when running off of an i386DX at 25 MHz, 8MB RAM, and 30-50MB of hard drive space. Now fast forward to Windows 7. My computer has an Intel Core Duo at 3GHz (64-bit), 4GB of RAM, and it uses 18GB!!! Guess what, it still takes 5-10 mins to boot up.

When the technology starts to slow down, software developers will have to focus on making more efficient code to make computers faster. Then if there is a technology break-through, computers will be amazingly fast!

1) Absolutely. I think even more likely than fully autonomous vehicles is grid-based ones (with a guidewire under the pavement), but one way or another, cars will have automated controls, soon.

2) Depends how you define Moore's Law. I've seen two different definitions: a) The number of transistors per square inch of chip doubles, or b) The "speed" (whether that's in MIPS or FLOPS, or whatever) of a given size CPU doubles.

If you go with (a), then yes, it's slowing and will continue to slow. But I'm not sure that's the case for (b).

More transistors on the chip are only part of the equation - there are other ways to improve performance of a chip - pipelines, multiple cores, etc. Then there's the whole quantum computing thing and wherever that leads. As well as the "next big thing", whatever it turns out to be - look here: http://www.computerworld.com/s/article/9219723/5_tech_breakthroughs_Chip_level_advances_that_may_change_computing for some possibilities.

3) Haven't read Daemon, but Charles Stross' "Accelerando" has much the same concepts in it. In fact, in that book, personal reputation becomes a commodity that is tracked (and traded) on exchanges much like the present-day stock exchanges. For those who haven't read him, Stross' writings are very apropos for this audience - a lot of his books (especially Halting State and Rule 34) deal with the future of the maker movement, and other near-future developments.

These two are so interrelated as to render the distinction moot.

The speed of any chip is limited by the time required to synchronize data across the two furthest transistors. Make the transistors smaller, and you can either do more at the same time, or do the same thing faster.

Not necessarily. First off, most chips are way less efficient than that theoretical limit - the actual time to perform an instruction is way higher than the time to send a signal all the way across the chip (most instructions require multiple steps, and take several clock cycles to implement). So even with the same exact transistor size, there's room for improvement by using those transistors in a more efficient way (compare, for example, the performance of pipelined processors compared to their non-pipelined contemporaries - the pipelined ones are significantly faster, even if the same exact transistor tech is used).

Second, what you stated is only true for transistors as we know them - if you can make the data travel between transistors faster (such as optical rather than electrical connections), you increase speed without making the transistor smaller. Or, if you replace the transistor with some other device (quantum computers perhaps?) the whole calculus changes. Finally, there are other bottlenecks in a typical computer system (such as the CPU to RAM bus), the elimination of which can significantly improve the speed of the device, even with no improvement of the actual CPU speed.
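The pipelining point above can be made concrete with the textbook cycle count for an ideal pipeline. This is only a sketch under simplifying assumptions: every instruction passes through the same number of one-cycle stages, and there are no stalls, hazards, or cache misses. The function names are made up for illustration.

```python
def cycles_unpipelined(n_instructions: int, n_stages: int) -> int:
    """Each instruction runs all stages before the next one starts."""
    return n_instructions * n_stages


def cycles_pipelined(n_instructions: int, n_stages: int) -> int:
    """Ideal pipeline: fill it once, then retire one instruction per cycle."""
    if n_instructions == 0:
        return 0
    return n_stages + n_instructions - 1


n, stages = 1000, 5
slow = cycles_unpipelined(n, stages)
fast = cycles_pipelined(n, stages)
print(f"unpipelined: {slow} cycles, pipelined: {fast} cycles, "
      f"speedup: {slow / fast:.2f}x")
```

With the same transistors and the same clock, the idealized speedup approaches the number of stages as the instruction count grows. Real processors fall short of that because of branches and data dependencies, but the direction of the argument holds.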

As I said, if we are talking Moore's law in the sense of size or count of transistors, then yes, it may well be slowing down. But that's not very interesting.

The author's thesis was that this would lead to the shift from disposable to non-disposable electronics. I don't see that. I think computers, music players, phones, etc. are going to keep improving in speed, size, storage capacity, etc. for a long time. Whether that improvement comes from smaller transistors or some other tech, who cares?

Two thoughts- most everyone can see an end to miniaturization, I think, because once you get down to picometer size, unless we can make computing devices with nucleons, individual atoms are it. If the only metric is transistors per sq cm, there is a hard limit to computational improvement.

But there are (I suspect) new programming paradigms, new things that have yet to emerge from interconnectedness that will make huge improvements in speed and information density. Further, there is likely a point where we are just not smart enough to directly program things, and improvements will come from learning and 'computational evolution'. So I am able to stay a technological optimist (and agnostic, in that I have no idea if or where we will hit hard limits or if or where we will make disruptive discoveries). The best part of this era is that information flows and technology is more accessible than ever before in history, which seeds the next steps.

I tend to agree with Hayek, that we have the conceit of knowledge, and we really don't know what motivated human minds will make of a world where there is vastly increased automation. Right now it stings, but I think that we can and must adapt, and need not be inert, victimized, or dependent on anyone else to rescue us. But this experiment is currently being run, so we will all find out.

I have sort of a beef about your interpretations of Moore's Law. If you are talking a planar, CMOS, silicon process, I can see that scaling won't take us beyond, say, 5nm gate lengths and that we would see no incremental gains in performance, power, or capacity.

However, if you look at the history of semiconductors, there are always predictions of the limits of memory, processor speed, feature size, and the like. What always ends up happening is that some improvement comes along to help stretch the curve out. Look up strained silicon, double patterning, immersion lithography, OPC (I could go on).

Now look at carbon nanotubes, quantum dots, memristors, 3D integration, and any other crazy thing you hear about being the "future." Who knows in 20-30 years what advances will be responsible for making our gear faster, lower-power, and with larger memory year after year.

I agree that mine is just another prediction bound to fail. But it makes for some interesting implications. Bunnie's twist (or at least my interpretation of his presentation) was that once we stop having the need to throw away our personal electronics it will increase the demand for collaboration and personal modification on a scale that we don't currently have. My cell phone is not really mine, it's what TMobile/HTC thinks I want/need. It's not. But there will come a day when Moore's law slows down and I'll have the capability to really make what I need/want.

There is a move in Complex manufacturing to retool a given tool in 10 sec etc... If this retooling moves to electronics - then individual boards will be data driven from pcb to placement (such that the 1-off price and the 100 price converge). Perhaps this next wave of personalized manufacturing (like printing before it) may open up the next horizon in more personalized and varied devices and EE applications.

I think the Moore's laws comments are exceedingly misleading in that they imply that as the number of transistors tops out it will also mean that the rate with which we're able to harness computers to execute our bidding is near the end of its growth curve as well. I think we all just intrinsically know that the exact opposite is the truth - we're just at the very beginning.

So that raises the question: how will we get past this limitation? Well, I think you can already see it in action. My "day" job is selling enterprise software solutions - 5-10 years ago if you needed scalability you went and bought a bigger box or added resources to your existing box - so-called "vertical" scaling. But now almost all scalability is focused on horizontal scaling - more cores, more virtual machines, more distribution - all of which grows out across any specific CPU boundaries. So while Moore's law, which really only applies to CPUs, may be getting ever closer to its end game, we're just getting started in understanding how to split jobs across distributed server farms. As we've already started to see from the promising results in areas such as grid computing, big data, cloud computing, etc., the usable computing power that can be delivered using distributed systems is staggering, and I don't think we'll hit the limit on that for a long, long time. A man can only lift so much but the Egyptians still figured out how to build pyramids...
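For readers who haven't seen horizontal scaling in code, here is a toy Python sketch of the pattern: split a job into chunks, hand each chunk to a worker, and combine the partial results. Everything in it is an assumption for illustration. The helper names are invented, and a local thread pool stands in for what would really be separate machines or processes, so it shows the shape of the idea and not an actual speedup.

```python
from concurrent.futures import ThreadPoolExecutor


def chunked(items, n_chunks):
    """Split `items` into `n_chunks` contiguous, roughly equal slices."""
    size, extra = divmod(len(items), n_chunks)
    start = 0
    for i in range(n_chunks):
        end = start + size + (1 if i < extra else 0)
        yield items[start:end]
        start = end


def sum_of_squares(chunk):
    """The unit of work each (stand-in) worker performs on its own slice."""
    return sum(x * x for x in chunk)


def distributed_sum_of_squares(items, workers=4):
    """Fan the chunks out to workers, then reduce the partial sums."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        partials = pool.map(sum_of_squares, chunked(items, workers))
        return sum(partials)


print(distributed_sum_of_squares(list(range(10)), workers=3))
```

The design point is that no single worker ever needs to be faster. Adding capacity means adding workers, as long as the job can be cut into independent pieces.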

just a couple things to add to this discussion.

it is true that this law was initialized to define the miniaturization of the transistor. however, when you look more closely at how this miniaturization comes into being, it is through an expansion in knowledge that we are able to miniaturize the transistor, so in this way it is possible to expand the scope of this "law". when the scope of this law includes all of the inventions that were predecessors to the invention of the transistor, you can follow it back in time just about as far as you want to take it. when you evaluate what you are looking at when you expand the scope, it appears that this law is an inherent property of human knowledge. if you then consider the implications that cosmological science offers, that the fundamental particles that make up our entire planet and likewise us as people are part of the big bang, and that we are all expanding and that this expansion is in fact accelerating as we have recently learned, and we then put the graph of the expansion of the universe next to Moore's law and do some normalization, you will see they are far too similar to be chance. now we consider what is being said about the transistor reaching some constant and not being able to expand any more. what i would say to that, considering what i have implied, is that you are not considering the whole picture and that there are variables in the equation that have been left out, such as paradigms in programming or the work that is being done with optical computing. i guess what i'm saying is that Moore's law is not tied to the transistor, because the transistor would not have come into being if the knowledge about it had not existed beforehand, and it does not get smaller based only on the variables; it's based on our expanding knowledge of what is really going on at the fundamental levels of matter. but don't take my word for it, investigate yourselves. criticism is always welcome. oh, great topic by the way, very cool stuff

Cars that drive themselves are cool and all, but I would really like to see cars equipped to interact with coils in (or on the side?) of roads for both braking and electromagnetic induction. Imagine if just by using the roads you could lighten the economical load required to provide energy to stoplights (or whatever roads will need to power when stoplights are obsolete) as well as saving wear and tear on your brakes?

Regarding CPU speed (as opposed to transistor density) - it's not truly the case that they aren't getting faster. It's that they are getting faster in different ways. Check out the discussion of the LMAX Disruptor technology, capable of 6M TPS on commodity hardware using a single thread, and written in Java. It was very carefully crafted to minimize locking and accessing horribly slow RAM.

I wish there was a way to compare Win7 to the code that does multi-beam and spotlight and synthetic aperture RADAR in a modern fighter plane (usually G5 PowerPCs).

A way to compare apples to oranges? Sounds like a slippery slope to me. Not sure where the bit about PowerPCs comes in; SAR had been around for a long time before the G5 came about, so I can't see how it would be 'usual' for them to use that chip instead of the custom DSPs they actually use.

As regards the self-driving cars: I can't see it for a looong time. On top of the practical considerations, lots of people just won't want it. I know I feel a bit frustrated by the AT that I currently drive and can't remember the last time I touched the cruise switch (and I live in northern Canada; I drive 90 miles to work and come to one stop sign, and journeys of 700-800 miles are not out of the ordinary.) I know not everyone cares as much about it as I do but there are a lot of us out there.

A way to compare code that has been worked on to get the greatest speed and efficiency from the hardware compared to something like Win7 laden with legacy details dating back to the first purchase of a ripoff of a poor excuse for an OS. And who knows what else. Why does it take 45 seconds to check my Java version? Sumthin ain't right.

And yes, PowerPC. Cell with all eight vector units is a GP processor and faster than the DSPs at the same time. You will find G4s, G5s, and Cells in lots of high-end military and space hardware. Sony used a custom Cell with only three active coprocessors for the PlayStation. I kinda wish Apple had stayed with G5. A Mac with a quad core Cell? 32 AltiVec units? Nearly instant results in Mathematica? What comes after "supercomputer", oh, in the SF vein, Colosalcomputer of course. Isn't that right Dr. Forbin? Woohoo!

I really don't see computers driving cars for a good, long while. That is, unless it turns out people enjoy waiting for the car to decide the paper bag in the road isn't a threat. And the car can keep control during unexpected hydroplaning. And it can reasonably handle tailgating fools. And, well, you get the idea -- there are poorly-defined requirements for actual driving.

More important than any technological potential or challenge, however, is the simple fact that people are intrinsic to the performance environment. Judging from my daily commute, about half of non-automated drivers will refuse to let automated drivers merge. And, about half the automation-enabled drivers with automation engaged will turn it off the second they get passed by the cunning, non-automated competition.

The problem with automating cars isn't technology, it's social/cultural. Good luck fixing that "soon" unless you arm the computers and let Darwin sort it out.

On the other hand, at least computers will use their turn signals. :)

/tongue-in-cheek

What does pound sign do? ## it is not doing anything...##

oh wait theyre gone.

Daemon was certainly an interesting book, and the sequel Freedom wrapped it up nicely. Another near future SF author to read is Vernor Vinge. Rainbows End actually has quite a few concepts rolled into it. Automated cars, large social networks and even bio-engineering. I highly recommend this book, even over Daemon, for a glimpse of just what the future may look like. It even tackles part of the maker aspect of the future.

I've read Rainbows End - it's also very good. I relate to the idea that some people will want to drive cars for the pleasure rather than turning over all aspects to automation. I'm reading Vernor Vinge's A Deepness in the Sky. It's hard to start, but now I'm completely sucked in.

I just realized Children of the Sky is out this month.

I think by the time we hit the point where the betterment of technology comes to a halt, quantum computing will hold more of the market than the version currently under research.

Popular topic! First, I would predict that you, or at least members of your cohort, may have very very long lifetimes. Between the life extension folks, nano/bio engineering for repair work at the molecular level, and nano-wire sized infiltration of the brain to pull off a backup and run on hardware instead of wetware, the possibilities are boggling. Interstellar exploration becomes feasible. Just clock yourself way down for the slow parts, and run a couple million times faster than a human when you need to think, or spend a couple hundred subjective years in virtual worlds. Now you need to consider the effect of 300 or 1000 year lifetimes on politics and behavior. And of course, if you live 300 years and keep up with things, you get to be part of the next advancement, etc. Someone will be the last person to die of natural causes.

On computer performance, there is tremendous improvement possible after the end of Moore's Law. I wish there was a way to compare Win7 to the code that does multi-beam and spotlight and synthetic aperture RADAR in a modern fighter plane (usually G5 PowerPCs). It would be a real wake-up call.

The fact that there are actually things my old 1MHz 8 bit Apple II does faster than my dual core 2.6GHz Linux box should tell you something. The layers of abstraction always exceed the computing ability in these consumer and hobby systems. Even the Arduino, as anemic as it is, can afford high level abstractions and interpreters when dealing with the speed of normal human activities. And why does Win7 take 50 seconds to check for my Java version? Sometimes I want to take one of my ARM9s, turn off the memory manager, load and lock the cache with my best code and see what it can really do. Or should I load Android and play Angry Birds? There is that one with the three pigs and too much glass and stone. Maybe if I hit it just in the joint between the.......

2) This is an interesting topic. I see computers having 8 cores, with each core effectively acting as 4 CPUs (I am assuming 386 4 8086 mode). So the processors could act as 32 CPUs and I have no idea if hyperthreading would be a multiplier on top of that. That is just in the x86 line. Add to that GPUs, and other dedicated processing units.

Technology is always advancing and I do believe we are now at a point where electronics will change drastically in the next 5 to 10 years. If memristors are integrated into standard electronic devices, say goodbye to charged gate memories and static RAMs. The space savings in RAM chips will be enormous. Then we have the research on being able to actually stop a photon. The top researcher believes they can do this on the miniature scale. That is just nuts. So, the clock is ticking before one of these breaks out and reduces heat, space, cost, etc. I would hazard a guess and say that computational speeds will increase and the rate will possibly go faster at some point.

So, maybe a better method for tracking computer technology increases should be the kilowatt vs the processing power.

And I thought this was going to be about AVC 2012 xD

Oh don't worry - we'll get there soon :)

IC's will start to grow in the 3rd dimension, and Moore's law will continue.

I think as a vision of the future, Peter Hamilton's "Pandora's Star" isn't unrealistic; pseudo-immortality, and being able to "re-life", is a comfort.

I believe we'd learn how to make wormholes before interstellar travel. We'll have computers implantable in our heads, and those computers will have the latest, greatest interconnectivity that will enable the "ratings" that everyone thinks are possible. That, and Apple will finally go bankrupt.

Even though scaling has been overblown for years (remember the 1 micron "wall"?), it will eventually catch up with us. Still, I don't think it much matters anymore. We've "scaled" performance and storage to such extents that it's no longer such a priority. At about the Core 2 Duo release, processor performance pretty much plateaued. Sure, we could use more cores, or maybe some more MHz, but really it doesn't add that much meaningful performance for people, even for enthusiasts. The same thing goes for RAM and storage. We have laptops with 8GB of RAM and 1TB hard drives. Exabytes might be nice, but even having the bandwidth, time, or internet to utilize that space would be hard to come by. The only meaningful improvements we'll be seeing for a while are in solid state storage, and maybe video graphics performance.

Nate, the new Stephen Hawking. I don't know if I'm ready for that one. You're good, but I don't know if you're that good!

While I would love for my car to drive itself in traffic, I think the major problem is obstacle detection/classification/avoidance at highway speeds.

For instance, animals crossing the roadway, pedestrians crossing / walking along roadway, fallen branches and rocks etc.

Detecting the various objects is tough enough, but classifying them as to the appropriate avoidance action is tougher still.

I.e., if a tumbleweed crosses your path no action is required, but if a basketball does, you had better be looking for the kid following the ball.

Also, when the lead car of several that is being driven automatically strikes a deer in the roadway, can the others behind react in time to avoid a pileup?

One way to solve that problem may be short range wireless networking. If every car within a few hundred yards "saw" the deer at once, they could coordinate their action. A passing car could even 'warn' cars behind it of things that may be potential hazards before they're hazards that require immediate action.

I'd hate to be in a network car getting hacked though.

And don't forget, roads can be smart too and transmit traffic or obstruction data up the line to cars that are on their way.

Roads are already very expensive, and often poorly maintained.

Who pays for the additional cost of the installation and maintenance of all the required sensors and transmitters to make our roads smart?

I'm a big believer in "smart roads" for vehicular guidance. Who pays for it? Same people that already pay for roads - taxpayers...

Do I expect every road to magically become smart at the same time? No, not at all.

What I expect is major cities, that have the worst traffic problems (NY, LA, etc.), start to put in smart roads in those congested city centers. At first, they'll provide incentives to drive cars that are on automatic in those areas, then, over time, as more and more cars get the technology, they'll make it mandatory to use in certain parts of the city at certain times.

Slowly, over time, the network of smart roads will grow.

For a recent example of this sort of gradual implementation of infrastructure, look at the spread of EZ-Pass over the last two decades or so (yes, I realize that EZ-Pass was way cheaper than smart roads, for both the government and drivers, but the analogy stands).

Two areas that I think are better to focus on instead of smart roads and self driving cars are telecommuting and community design.

If more people telecommute, they avoid the energy costs and time wasted while driving.

If communities are designed so that people can walk to most of the places they need to do business with, there is little need for a personal car. Visit Canberra, Australia for an example.

Good point. With more and more shift away from a manufacturing economy and towards a knowledge/information economy (at least here in the US), there's less and less reason to physically go to work. If more people were willing to handle business matters remotely, cars would be far less of a problem.

Sadly, I work in a completely information-based industry (I'm an accountant - all I ever deal with is data), I run my own company (with a partner), and I still can't telecommute - the clients insist on meeting face-to-face, the employees need daily contact, and there are still too many physical processes involved. So I drive 45min-1h to and from work every day... Sigh...

So... that's one hurdle we need to clear. lol

Moore's law (well actually it's more like Moore's observation) is only about the decreasing size of circuit elements on silicon. There ARE other ways to increase density, such as using one more dimension in IC construction (Spock, in STII: "His thinking is two dimensional Jim"). When we finally figure out how to make IC's on a QUBE of silicon things will get real interesting!

The idea of a personal rating system is not new in this book - it was also pretty integral to the story in Cory Doctorow's "Down and Out in the Magic Kingdom" - in which "Whuffie score" was the personal rating system, and in which the score is instantly accessible to everyone, due to ubiquitous use of ocular implants and augmented reality. Imagine the scores floating above the heads of everyone you meet!

Sorry - I should have phrased it differently. I did not intend to say that he was the first to have the idea. The idea is not mine, so I was trying to give credit to Daniel for giving me the idea.

I think what may be more important is how these drastic changes in technology can change US.

It can be said that the internet we have today is an extension of our senses: memory, information, imagination, etc.

At what point would these differences change us at a basic cognitive level, to the point where we would share little in common with other humans 100 years in the past?

I think becoming trans-human or post-human is the most important change the future brings.

After all, isn't the need for becoming sufficiently "godlike" the most human trait of all?

1) Cars and trucks will eventually drive themselves, but we won't get there with the way the world's education system works. To all you hobbyists: whether you like it or not, get a college degree in engineering. It helps you find a job, allows you to keep a job in a recession, and puts you on the path to making the dream of these cars driving themselves a reality.

I cannot stress this enough: never look for glory in engineering, because you are already there. Just remember that what an engineer does (even if it's verifying one circuit for a customer) can make a huge impact on the world.

2) One thing that is always mentioned in EE in college, but never explored beyond that, is the types of silicon structures that can be created, mainly the memristor and stacking transistors on a silicon substrate in 3D orientations.

Coming from a RAM background, I saw a lot of changes. One of them was the announcement that HP was able to create the legendary 4th basic element in a circuit, the memristor. I have since changed careers, and it still amazes me that HP is nearing consumer production of the memristor, and they hope to have a product out in 2013 with this element in it.

Now, not a lot of people fully understand the memristor or how it works (I still don't have a clear understanding of how it works, but IEEE Publications is helping with that). But it is innovations like this that cause Moore's Law to become obsolete.

Another point that makes Moore's Law obsolete is that it was based on a 2D plane of transistors and never took into account the volume of a 3D structure. Intel has devised a way to continue to condense their chips by building upward in the +Z direction, which also makes it obsolete.

3) I work at a company which already takes feedback from customers and employees to try to better the culture and products. When you hobbyists can, ask for help from others, ESPECIALLY ENGINEERS! And take the help with a smile. There is one thing we engineers love to do, and it is to teach others how things work.

/rant

I totally agree with items #1 and #3; however, I think (and hope) Moore's law will keep marching on.

I've been saying for a long time: eventually we'll have the ability to up-vote/down-vote people while we are driving. If someone cuts you off: down-vote; if a friendly car moves over to let you merge: up-vote. Maybe people who are rated as really poor drivers will be highlighted red in our windshield HUD to warn us that they are potentially dangerous.

I think we'll also have the ability to "drop" messages off with other cars. It would be nice to send someone a message just letting them know their tail-light is out. Of course, this leaves the possibility for abuse, but the internet is already vetting some good techniques for community control.

Take something like Apple's new Siri, a few cameras with license plate detection software, a mini-projector, and little car Wi-Fi hotspots, and we're almost there.

Driverless cars are a given: they have all of the laziness (convenience) of public transit with the selfishness (hygiene) of personal transport. PLUS, the governor of Nevada recently signed a law requiring the Nevada Department of Motor Vehicles to “adopt regulations authorizing the operation of autonomous vehicles.”

I'm gonna call the Moore's Law prediction right, but the conclusions that Bunnie drew from it are probably not accurate. Moore's law only describes trends related to transistor logic, but exponential growth in technological development was happening before transistors as well. Moore's Law was supposed to stop in the 80s or 90s, but now it looks like it may continue until 2015 or 2020, and after that we should be positioned for another paradigm shift (like from electromechanical computing to relays, or from there to vacuum tubes). This should continue until we hit the physical hard limits of computing (size) with electron-spin, quantum or light computing.

I dunno, that might all be rubbish too, hard to say. All I know is that I'll be perfectly happy if things slow down around the time that my femtotech iPod weighs less than a penny and can store every song ever recorded twice over.

this has absolutely no relation to this post... but I'm kinda desperate and in need of an answer... I apologize in advance for posting here...

I'm currently working on my thesis and I'm using some SF parts. I would like to use some images from here (screenshots, then modified). But I would like to know if I'm allowed to, or if I'm allowed but have to cite or something...

Again, I'm sorry for posting here... I haven't slept that much lately and need to finish my thesis ASAP...

thnx!

You are more than welcome to use any of our images or descriptions of our parts so long as you put "From SparkFun.com" or something along those lines near it. Best of luck with your thesis!

Thank you! I'm citing everything so that you guys get all the credit! Most appreciated! :D

And thank you! I'll let you guys know how it goes! :D

What do you think the social consequences of vastly increased automation will be? A welfare state or ???

(Bad healthcare can only do so much, so fast)

In other words, how will people afford to buy all the fun stuff without jobs or money or the sense of societal identity currently provided to them by "their" (I'm speaking figuratively, of course, as they don't really own anything) jobs?

Will the government outsource American-care to some other country to take care of them cheaply? (privatization)

Will homeless people be able to vote somewhere? Or will they just vanish? (the soylent green scenario)

How will horse-drawn carriage drivers afford to buy all the fun stuff without jobs or money or the sense of societal identity currently provided to them by "their" (I'm speaking figuratively, of course, as they don't really own anything) jobs?

I don't know. Maybe we'll eat all of them? (soylent green??)

You're joking, right?

Sure there are issues with the advancement of science and technology, but overall the result has been good. Somehow, I have a feeling that mankind will find a way.

Funny, because driverless cars already existed once. They were called horses. People could sleep and the horse would know its way back home. Then we replaced them with machines that, while much more efficient, needed much more input from the user.

The point of increased automation is to increase the value of human labor. Just as Excel hasn't eliminated accountants, future people will have greater and greater tools. Slowly, bad jobs will be eliminated and quality of life will improve because of lower costs. In the Middle Ages more than 50% of the world population were farmers. Are there fewer farmers now? Yes. Are there fewer jobs because of it? No.

If there were a Moore's law for social change, it would be flat over a (human) generation. None of the above will happen. Sadly, we will continue with the most expensive but worst health care in the industrialized world, and other social problems, through our lifetimes.